The Pitfall of Local Optimization

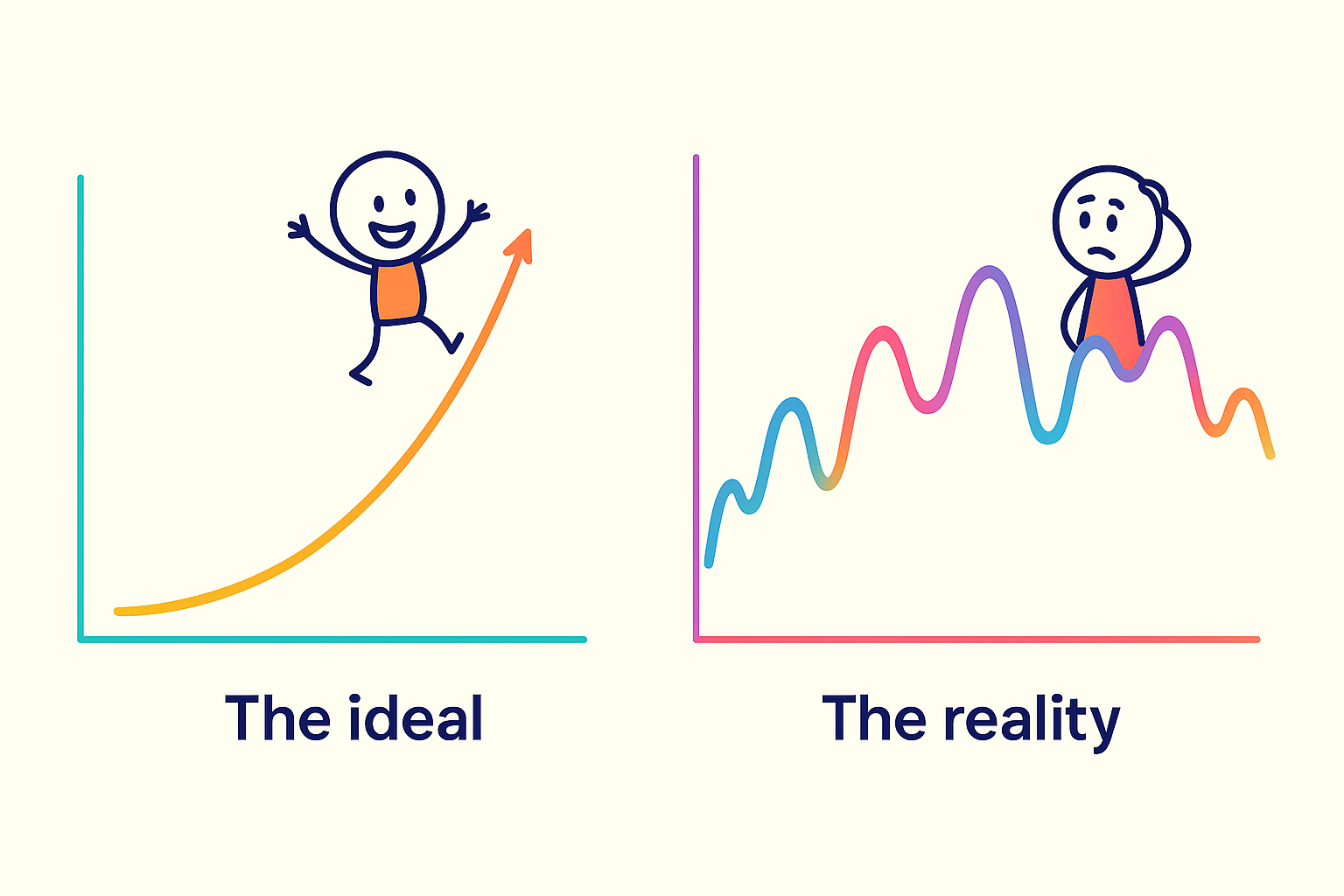

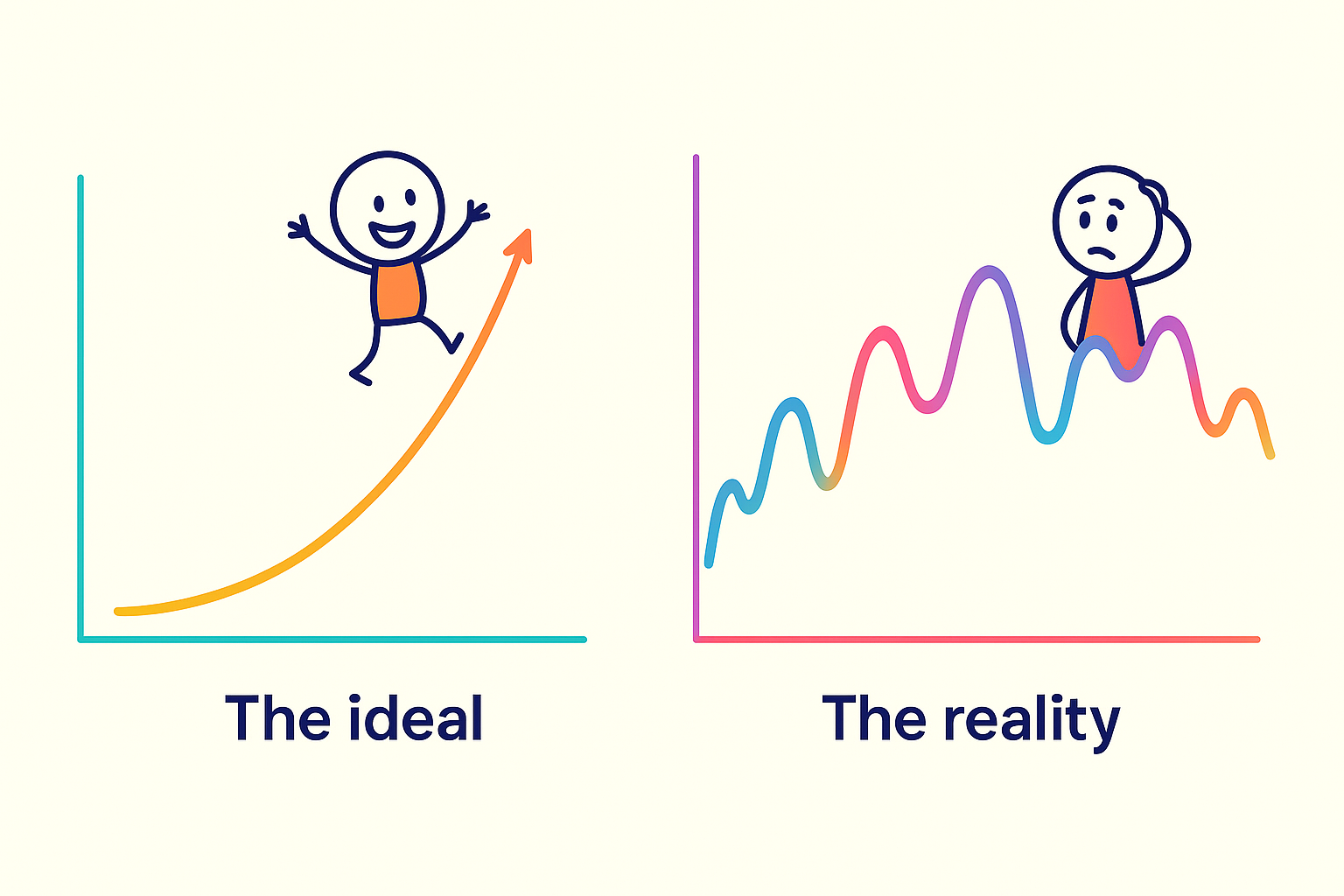

As an engineer, I'm obsessed with optimization. But here's the trap: improving one thing every day doesn't guarantee you'll reach your goals. When you've done everything right and you're still nowhere close, what you need is the big picture you've been ignoring.

Optimization. Oh, how I love thee. Whenever I code, I'm obsessed with using computational resources efficiently.

It's not just about engineering, though. In a broader sense, optimization is about creating better outcomes with the same or fewer resources. Of all optimization algorithms, the most straightforward is probably hill climbing, taken here in its most general form: score the current system, tweak some variables, re-evaluate, keep the change if the score went up, and repeat. It's well known in computing that hill climbing tends to get stuck at a local maximum, a point where every small step makes things worse even though a higher peak exists elsewhere, unless the objective function is simple enough to be linear or to have just one peak. Reality is never that simple.
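To make the trap concrete, here is a minimal hill-climbing sketch. The objective `score` is a made-up function with two Gaussian bumps, a lower peak near x = -1 and a taller one near x = 2; the names `score` and `hill_climb`, the step size, and the iteration cap are illustrative assumptions, not part of any library.

```python
import math


def score(x: float) -> float:
    # Hypothetical objective with two peaks: a lower bump near x = -1.0
    # and a taller bump near x = 2.0.
    return math.exp(-(x + 1.0) ** 2) + 2.0 * math.exp(-(x - 2.0) ** 2)


def hill_climb(x: float, step: float = 0.01, max_iters: int = 10_000) -> float:
    """Greedy hill climbing: move to a neighbor only if it scores higher."""
    for _ in range(max_iters):
        # Look one small step to the left and one to the right.
        best = max((x - step, x + step), key=score)
        if score(best) <= score(x):
            # No neighbor improves: we are at a peak, possibly only a local one.
            return x
        x = best
    return x


print(hill_climb(-2.0), hill_climb(1.0))
```

Started at x = -2.0, the climber stops near the lower peak around x = -1.0, because every single step from there goes downhill. Started at x = 1.0, it reaches the taller peak near x = 2.0. Same algorithm, same diligence; the outcome is decided by where you began.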

How does that translate outside the computing world, though?

- Parents who believe that as long as their children's grades keep going up, their children will thrive.

- A tennis player who believes that winning every point is how you win every competition, and ends up losing. (Roger Federer won only 54% of the points he played while winning about 80% of his matches, btw.)

- A chess player who is obsessed with a perfect winning streak ends up stuck in the entry tier, since that's the only place where his legend stays intact.

- A business that strives for growth by optimizing its upper funnel with an enormous number of A/B tests ends up nowhere near its original revenue goal.

- A diligent worker who saves every penny, believing it's the way to reach F.I.R.E. (Financial Independence, Retire Early), never gets to live their ideal life despite having boatloads of money in the bank.

These examples all sound different, but the core idea is the same: the false assumption that by improving along one dimension every day, you will eventually reach your goals.

This is another side of Donald Knuth's famous quote, "Premature optimization is the root of all evil," one that probably doesn't get discussed often enough: local optimization is the root of all frustration. It is the frustration of being nowhere close to what you want despite having done everything right.

What lies underneath is the lack of a big picture. Ignoring how the parts of a system interact leads to the false belief that a system's value equals the sum of the values of its parts. It is like saying the Mona Lisa is worth the price of its wooden panel plus its paint.
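The "sum of its parts" fallacy has a direct counterpart in optimization: when parts interact, improving one dimension at a time can stall even though a much better configuration exists. The sketch below uses a made-up integer score in which each part helps on its own, but any mismatch between the two parts is penalized by an interaction term. The names `score`, `one_dimension_at_a_time`, and `joint_moves` are hypothetical, chosen only for illustration.

```python
def score(x: int, y: int) -> int:
    # Hypothetical system score: each part adds value on its own (x + y),
    # but the interaction term -2 * (x - y) ** 2 punishes any mismatch.
    return x + y - 2 * (x - y) ** 2


def one_dimension_at_a_time(x: int, y: int, max_rounds: int = 100) -> tuple[int, int]:
    """Tweak x alone or y alone by +/-1; keep a change only if the score rises."""
    for _ in range(max_rounds):
        improved = False
        for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            if score(x + dx, y + dy) > score(x, y):
                x, y = x + dx, y + dy
                improved = True
        if not improved:
            break  # every single-part tweak makes things worse
    return x, y


def joint_moves(x: int, y: int, steps: int = 10) -> tuple[int, int]:
    """Move both parts together, so the interaction penalty stays at zero."""
    for _ in range(steps):
        if score(x + 1, y + 1) > score(x, y):
            x, y = x + 1, y + 1
    return x, y


print(one_dimension_at_a_time(0, 0), joint_moves(0, 0))
```

Starting from (0, 0), every single-part tweak lowers the score, so the one-at-a-time search never moves and stays at a score of 0, while moving both parts together reaches (10, 10) with a score of 20. Neither part is the problem; the value lives in how they fit together.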

That mindset then breeds two siblings: local optimization, and the tendency to fix immediate symptoms rather than examine the whole system. This function crashes when it gets a null pointer, so let's handle that case specifically rather than investigating why a null pointer shows up in the first place and whether we could restrict the type further; these students scored below average, so let's punish them by tripling their homework rather than understanding where they got stuck; there have been 5 car accidents at this crossroad, so let's install more cameras and raise the fine rather than re-evaluating the design of the junction. The list goes on. There are certainly cases where local optimization and quick patching are legitimate, but they have to be a conscious choice based on a thorough understanding of the whole system.
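The null-pointer case can be sketched in Python, where `None` plays the role of the null pointer. This is a hypothetical example: `RawUser`, `User`, `validate`, `email_domain_patched`, and `email_domain` are invented for illustration. Note that Python type hints are not enforced at runtime; the "restricted type" only pays off when a static checker such as mypy verifies the callers.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RawUser:
    # Hypothetical input record: the email may be missing.
    name: str
    email: Optional[str]


def email_domain_patched(user: RawUser) -> str:
    # Symptom patch: the crash goes away, but every caller must now know
    # that "" means "no email", and nobody asks why it was missing.
    if user.email is None:
        return ""
    return user.email.split("@", 1)[1]


@dataclass(frozen=True)
class User:
    # Restricted type: a validated user always has an email.
    name: str
    email: str


def validate(raw: RawUser) -> User:
    # Decide once, at the boundary, what a valid user is.
    if raw.email is None:
        raise ValueError(f"user {raw.name!r} has no email")
    return User(raw.name, raw.email)


def email_domain(user: User) -> str:
    # No None check needed: the type already rules it out.
    return user.email.split("@", 1)[1]


print(email_domain(validate(RawUser("bob", "bob@example.com"))))
```

The patched version stops the crash but spreads an ambiguous empty string through the codebase. The second version turns a missing email into one visible decision at the boundary, and the function downstream no longer has to guard against `None` at all.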

If there are no small problems, there can be no big ones. If we move the needle every day, we will reach our goal. I sincerely wish life were that easy.

So let's keep an eye on the big picture, think in the longer term, and understand the system as a whole. As an engineer, architect your code so that it cleanly conveys the spec before tuning here and there. As a leader, prioritize the vision and the strategy toward it, not the ad hoc tweaks along the way. As a person, know that it is perfectly normal, even necessary, to have downs in life: sometimes the only way to a higher peak is to walk downhill first.